Google’s John Mueller said on Twitter last week that if your sitemap ranks in Google Search for a query, it indicates that your site may have quality issues.

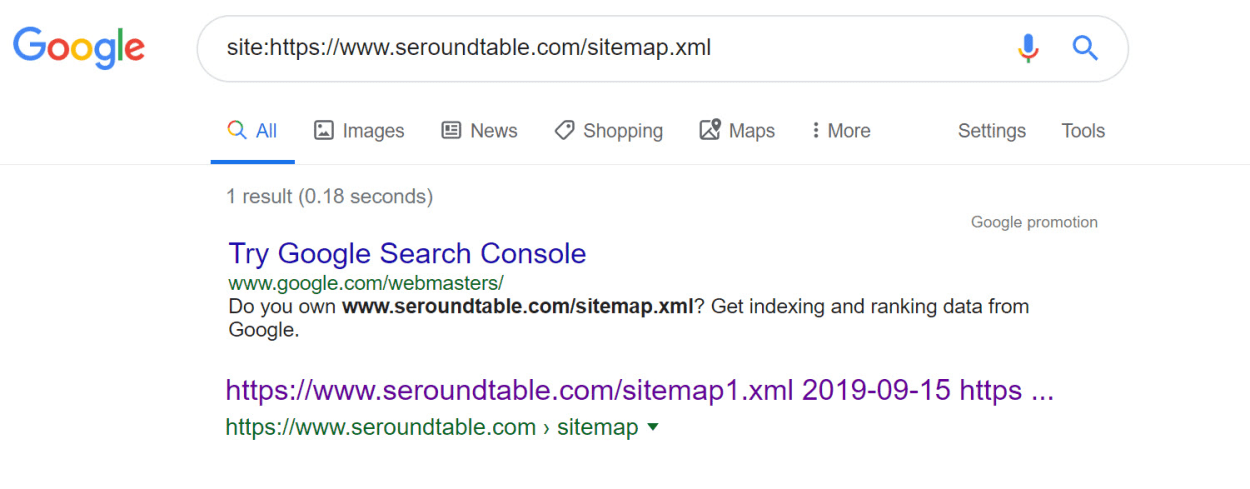

The comment came about when Twitter user Bolocan Cristian posted a screenshot of a search result, asking Mueller how he could remove the sitemap file from the search results.

Cristian followed up, asking, “Why is Google indexing URLs like sitemaps.xml?”

@JohnMu how can i remove from Google index this XML sitemap? Why is Google indexing URL's like sitemaps.xml? pic.twitter.com/wLNwajfimc

— Bolocan Cristian (@bolocancristian) October 30, 2019

Mueller criticized the site’s content, saying, “there’s not a lot of good content that’s worth showing.”

He continued, “when XML files are seen as being more relevant than your pages, that’s often a sign of your pages being really suboptimal. I’d work on that first if this query is important.”

You can see Mueller’s full reply below:

Past that, you can use the URL removal tool for hiding URLs urgently in the search results, or use the x-robots-tag HTTP header to include a "noindex" for non-HTML content. I'd focus on the content first though, if the query is important to you.

— Hey John, Your profile caught my eye. Ouch. (@JohnMu) October 30, 2019

Mueller followed up with advice on removing the URL from the Search Engine Results Pages (SERPs).

However, he reiterated his advice to focus on content if that particular search query was important.

Is it normal for XML Sitemaps to be indexed?

I checked the sites I manage, and none of their sitemaps were indexed. I then checked some other popular sites, and a surprising number of them had indexed sitemaps (Seroundtable, for example).

I think it is worth clarifying that your sitemap showing up as indexed is not an issue.

The issue is when the sitemap shows in the search results when you type in a keyword relating to your site’s content. This indicates that Google thinks your sitemap is more relevant to that query than the content on your site.

The only official reference to indexable sitemaps that I could find is an archived copy of a Google Groups thread from 2008.

In it, Mueller said that the sitemap files might have been linked to from somewhere, which suggests that Google does not index sitemaps unless they can be reached through links elsewhere on the web.

Mueller said, “It does look like we have some of your Sitemap files indexed. It’s possible that there is a link to them somewhere (or perhaps to the Sitemaps Index file).”

The URL removal tool in Search Console only hides a URL for 90 days. If something still links to the sitemap, it will most likely find its way back into the index once the 90 days are up.

The best way to stop your XML Sitemap file from being indexed

Mueller recommends setting the X-Robots-Tag HTTP header to “noindex” for non-HTML content.

I’d do it slightly differently to be more specific to the sitemap file.

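On an Apache server (including one running behind NGINX as a reverse proxy), the easiest way is to add the following snippet to your .htaccess file. It requires mod_headers to be enabled, and the pattern is escaped and anchored so that it only matches file names ending in sitemap.xml:

    <FilesMatch "sitemap\.xml$">
        Header set X-Robots-Tag "noindex"
    </FilesMatch>

For NGINX, you could add the following to the relevant server block of the configuration file: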

    location = /sitemap.xml {
        add_header X-Robots-Tag "noindex";
    }
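After reloading the server, it is worth confirming that the header is really being sent. The following is a minimal Python sketch for that check, not an official tool: the function names `has_noindex` and `sitemap_is_noindexed` are hypothetical, and it assumes the server answers HEAD requests for the sitemap URL.

```python
from urllib.request import Request, urlopen


def has_noindex(header_values):
    """Return True if any X-Robots-Tag value carries a noindex directive."""
    for value in header_values:
        # One header value can hold several comma-separated directives,
        # optionally prefixed with a user agent ("googlebot: noindex").
        for directive in value.lower().split(","):
            # Keep only the part after the last colon to drop a user-agent prefix.
            name = directive.rsplit(":", 1)[-1].strip()
            # "none" is treated as equivalent to "noindex, nofollow".
            if name in {"noindex", "none"}:
                return True
    return False


def sitemap_is_noindexed(url):
    """Send a HEAD request to `url` and inspect its X-Robots-Tag headers."""
    request = Request(url, method="HEAD")
    with urlopen(request, timeout=10) as response:
        # get_all returns None when the header is absent.
        return has_noindex(response.headers.get_all("X-Robots-Tag") or [])
```

Calling `sitemap_is_noindexed("https://example.com/sitemap.xml")` with your own sitemap URL in place of the placeholder should return `True` once either snippet above is active. If it returns `False`, the rule is probably not matching the file, or the header module is not enabled.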